This guide shows you how to deploy a chatbot that lets you query your PostgreSQL database using natural language. You will build a system that accepts chat messages, retains conversation history, constructs dynamic SQL queries, and returns responses generated by an AI model. By following these instructions, you will have a working solution that integrates n8n’s AI Agent capabilities with PostgreSQL.

Prerequisites

Before you begin, ensure that you have the following:

- An active n8n instance (self-hosted or cloud) running version 1.50.0 or later.

- Valid PostgreSQL credentials configured in n8n.

- API credentials for the Groq Chat Model (or your preferred AI language model).

- Basic familiarity with SQL (specifically PostgreSQL syntax) and n8n node concepts such as chat triggers and memory buffers.

- Access to the n8n Docs on AI Agents for further reference.

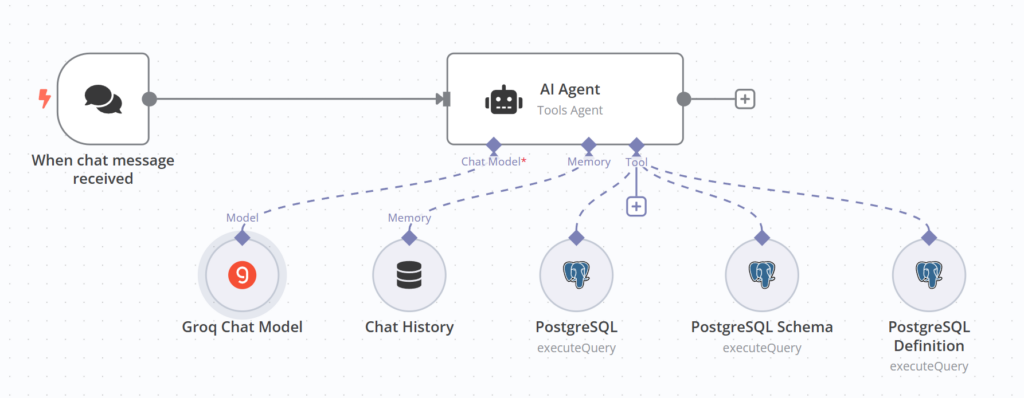

Workflow Setup

- Chat Interface & Trigger

- When Chat Message Received: This node listens for incoming chat messages via a webhook. When a message arrives, it triggers the workflow immediately.

- Conversation Memory

- Chat History: This memory buffer node stores the last 10 interactions. It supplies conversation context to the AI Agent, ensuring that responses consider previous messages.

- AI Agent Core

- AI Agent (Tools Agent): The AI Agent node orchestrates the conversation by receiving the chat input and conversation history. It dynamically generates PostgreSQL-compatible SQL queries based on your requests and coordinates calls to external tools (such as PostgreSQL nodes).

- Database Interactions

- PostgreSQL Node (Query Execution): This node executes the SQL query generated by the AI Agent against your PostgreSQL database. You reference the query using an expression (e.g.,

{{$node["AI Agent"].json.sql_query}}), allowing the agent’s output to control data retrieval. - PostgreSQL Node (Schema Retrieval): This node (or a dedicated step using the PostgreSQL node) retrieves a list of relevant tables from your PostgreSQL database (e.g., from the

publicschema, excluding system schemas likepg_catalogorinformation_schema). The agent uses this information to understand the available tables. This typically involves executing a query likeSELECT table_name FROM information_schema.tables WHERE table_schema = 'public';. - PostgreSQL Node (Table Definition Retrieval): This node (or another dedicated step using the PostgreSQL node) fetches detailed metadata (such as column names, data types, and potentially relationships using foreign keys) for a specific table. The table name (and schema if necessary) is supplied dynamically by the AI Agent. This often involves querying

information_schema.columns, e.g.,SELECT column_name, data_type FROM information_schema.columns WHERE table_name = '{{dynamic_table_name}}' AND table_schema = 'public';.

- PostgreSQL Node (Query Execution): This node executes the SQL query generated by the AI Agent against your PostgreSQL database. You reference the query using an expression (e.g.,

- Language Model Processing

- Groq Chat Model: This node connects to the Groq Chat API to generate text completions. It processes the combined input (chat message, context, and data fetched from PostgreSQL) and produces the final response.

- Guidance & Customization

- Sticky Notes: These nodes provide guidance on:

- Switching the chat model if you wish to use another provider (e.g., OpenAI or Anthropic).

- Adjusting the maximum token count per interaction.

- Customizing the SQL queries (ensuring PostgreSQL compatibility) and the context window size.

- They help you modify the workflow to suit your environment and requirements.

- Sticky Notes: These nodes provide guidance on:

Workflow Connections

- The Chat Trigger passes the incoming message to the AI Agent.

- The Chat History node supplies conversation context to the AI Agent.

- The AI Agent calls the PostgreSQL nodes as external tools, generating and sending dynamic SQL queries.

- The Groq Chat Model processes the consolidated input from the agent and outputs the natural language response delivered to the user.

Testing the Workflow

- Send a chat message using the chat interface.

- Observe how the AI Agent processes the input and generates a corresponding PostgreSQL SQL query.

- Verify that the PostgreSQL nodes execute the query correctly against your database and return data.

- Confirm that the Groq Chat Model produces a coherent natural language response based on the query results.

- Refer to the sticky notes for guidance if you need to fine-tune any node settings or SQL queries.

Next Steps and References

- Customize Your AI Model: Replace the Groq Chat Model with another language model (such as the OpenAI Chat Model) by updating the node credentials and configuration.

- Enhance Memory Settings: Adjust the Chat History node’s context window to retain more or fewer messages based on your needs.

- Modify SQL Queries: Update the SQL queries within the PostgreSQL nodes or refine the prompts for the AI Agent to ensure they match your specific database schema and desired data, adhering to PostgreSQL syntax.

- Further Reading: Consult the n8n Docs on AI Agents for additional details and examples to expand your workflow’s capabilities.

- Set Up a Website Chatbot: Copy & Paste and replace the placeholders in the following code to embed the chatbot into your personal or company’s website: View in CodePen 🡥

By following these steps, you will deploy a robust AI chatbot workflow that integrates with your PostgreSQL database, allowing you to query data using natural language.

Leave a Reply